Fast Fourier Transformation

| Time (s) | Force (N) |

|---|---|

| 0 | 0 |

| 0 | 0 |

| 0 | 0 |

| 0 | 0 |

| 0 | 0 |

Introduction:

What is a Fourier Transform?

Imagine you have a complex sound, like a musical chord. The chord is a single signal, but it’s made of multiple notes (frequencies) played together. The Fourier Transform is like a magic decoder that listens to the chord and tells you which notes (frequencies) and how loudly (amplitude) each is present. In general, the Fourier Transform breaks a signal into a sum of sinusoidal waves (sines and cosines) of different frequencies. Each sinusoid has a certain amplitude and phase, and adding them up reconstructs the original signal. This is analogous to how a recipe’s ingredients combine to create a dish – Fourier analysis tells us the “recipe” of frequencies in our signal.

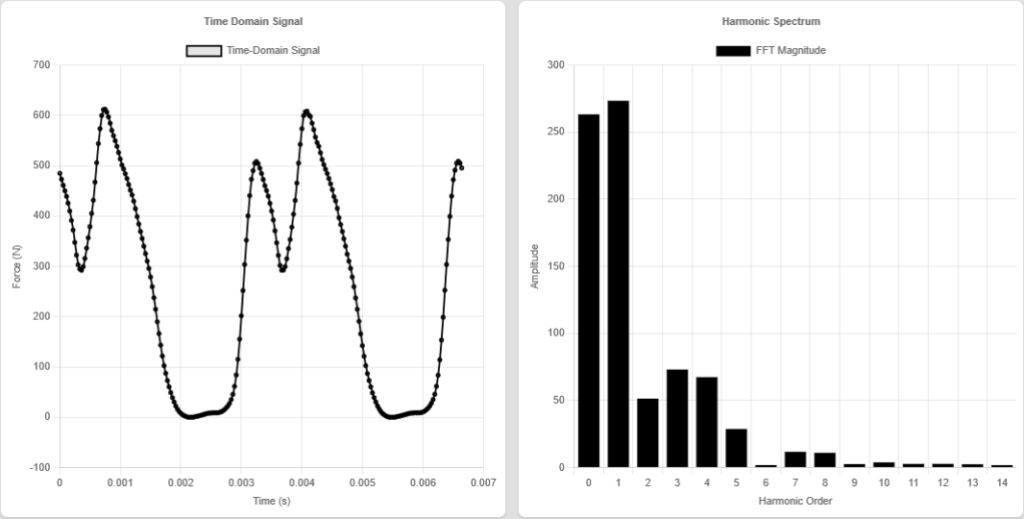

One way to build intuition is to think of time-domain vs. frequency-domain representations. The time-domain is how a signal changes over time (what you’d see on an oscilloscope or audio waveform). The frequency-domain is like a “spectrum” of the signal – it shows the signal’s energy or amplitude at each frequency (like a bar graph of which frequencies are present). For example, a pure sine wave at 440 Hz (the musical note A) in the time domain corresponds to a single spike at 440 Hz in the frequency domain. A more complex signal will have multiple spikes or a continuous range in the frequency domain representing its composition.

Breaking signals into frequencies isn’t just a mathematical curiosity – it’s extremely practical. Engineers and scientists use frequency-domain analysis to understand and process signals more easily. For instance, filtering out noise from audio is often simpler in the frequency domain by removing unwanted frequency components. Likewise, in communications, analyzing signals in the frequency domain helps design radios and WiFi that separate different channels. The Fourier Transform is the foundation for these techniques, acting as a translator between the time domain and frequency domain.

A Bit of History and Motivation

The concept of representing signals as sums of sinusoids dates back over two centuries. In the early 1800s, Joseph Fourier hypothesized that any periodic signal could be expressed as a sum of sine and cosine waves. He introduced Fourier series while solving the heat equation, using trigonometric series to represent heat distributions.

This was a revolutionary idea: even seemingly arbitrary waveforms could be built from simple oscillating components. Fourier’s insight laid the groundwork for what we now call Fourier analysis.

As mathematics and engineering progressed, the idea extended beyond periodic signals. The Fourier Transform generalized Fourier’s idea to non-periodic signals (using integrals instead of sums), allowing us to handle arbitrary signals and break them into continuous spectra of frequencies. With the advent of digital computing and the need to analyze discrete data (like digital audio or sampled sensor readings), the Discrete Fourier Transform (DFT) became crucial. However, a direct computation of the DFT was computationally expensive for long signals, requiring on the order of N² operations for N data points. In the 1960s, as scientists started using computers to process signals, this slow computation became a serious bottleneck.

The Fast Fourier Transform (FFT).

In 1965, James Cooley and John Tukey published an algorithm that could compute the same DFT result much faster. This was a game-changer. Suddenly, tasks that were impractical became feasible – spectrum analysis, digital filtering, image processing, and more could be done on much larger datasets in reasonable time. (It turns out the FFT wasn’t entirely new: the mathematician Carl Friedrich Gauss had actually discovered a similar method back in 1805, but it remained unnoticed in his unpublished work!). The FFT’s discovery and popularization in 1965 transformed digital signal processing. In fact, mathematician Gilbert Strang described the FFT as “the most important numerical algorithm of our lifetime,” and it was listed among the top 10 algorithms of the 20th century. This historical journey from Fourier’s theoretical work to Cooley and Tukey’s efficient algorithm shows how a powerful idea, combined with computational innovation, enables the technology we use every day.

Understanding the Discrete Fourier Transform (DFT)

What exactly is the DFT?

In simple terms, the DFT is a mathematical operation that transforms a finite sequence of signal samples (time-domain) into an equal-length sequence of complex numbers (frequency-domain). Each complex number represents the amplitude and phase of a particular frequency component in the original signal. The DFT assumes the “N” samples represent one period of a periodic signal or a finite duration of a signal, and it essentially computes the coefficients of a combination of sinusoids needed to reconstruct those samples.

Mathematically, the DFT of a sequence \( x[n] \) (n = 0…N−1) is defined as:

In this formula:

- N is the total number of samples.

- \( e^{-j \frac{2\pi}{N}kn} \) is a complex exponential (Euler’s formula links this to cosines and sines).

- The result \( X[k] \) is generally complex-valued. Its real part comes from correlating the signal with a cosine wave of frequency \( k/N \) (relative to the sample rate), and the imaginary part comes from correlating with a sine wave.

- In essence, the DFT is checking “how much” of a cosine and sine of that particular frequency are present in the signal.

Let’s unpack that: The term \( e^{-j \frac{2\pi k n}{N}} \) can be rewritten as:

So we can think of \( X[k] \) as:

Here, the real part is: \( \Re\{X[k]\} = \sum x[n] \cos\left(\frac{2\pi k n}{N}\right) \), and the imaginary part is: \( \Im\{X[k]\} = -\sum x[n] \sin\left(\frac{2\pi k n}{N}\right) \).

If \( X[k] \) has a large real part (relative to the imaginary) for some \( k \), it means the signal aligns strongly with a cosine at that frequency; a large imaginary part means alignment with a sine.

Together, the magnitude is:

…and the phase is:

Typically, we plot the magnitude spectrum \( \left| X[k] \right| \) to see which frequencies are present in the signal and how strong they are.

For real-world signals that are real-valued (like most audio or sensor data), the DFT output has a symmetry: the second half of the output (frequencies above Nyquist) is the mirror image (complex conjugate) of the first half. So often we only plot or consider the first N/2 bins (from 0 up to Nyquist frequency).

Where is the DFT used?

Almost everywhere in engineering and science whenever we need to analyze or manipulate frequencies. In digital signal processing, the DFT is the cornerstone for analyzing the spectrum of signals. For example, audio engineers use it to visualize sound spectra or design filters (removing noise or boosting bass). Radio and communications engineers run DFTs to examine signals for modulation content or interference. In image processing, 2D DFTs help with filtering and restoration, and a cousin of the DFT (the Discrete Cosine Transform) is used for JPEG image compression to represent image blocks in terms of spatial frequencies. Even outside of traditional signal processing, the DFT pops up in solving differential equations, quantum physics (wavefunctions), and machine learning (feature extraction). Whenever a problem involves periodicity or frequency content, the DFT is likely to be involved. In summary, the DFT provides a frequency domain representation of data, which can reveal patterns not obvious in the time domain and enable powerful techniques for processing.

The Fast Fourier Transform (FFT) Algorithm Explained

While the DFT is incredibly useful, its direct computation using the formula above is slow for large \( N \).

For example, if \( N = 1000 \), the formula requires about \( 1000^2 = 1,000,000 \) multiplications – and the cost grows quadratically with \( N \). That’s where the Fast Fourier Transform (FFT) comes in.

💡 The FFT is not a different transform — it's the same DFT computed with a smarter algorithm. So, DFT vs FFT gives the same result — FFT is just a faster way to get it.

The most common FFT algorithm was published by Cooley and Tukey (1965). It uses the classic “divide and conquer” strategy:

- 🔀 Split the input into even and odd indexed samples:

- Evens: \( x[0], x[2], x[4], \ldots \)

- Odds: \( x[1], x[3], x[5], \ldots \)

- 🔁 Recursively compute the DFT of each half (evens and odds). This continues until size-1 DFTs (just the points themselves).

- 🦋 Combine the two halves using the "butterfly" pattern:

Where \( W_k = e^{-j \frac{2\pi k}{N}} \) is the twiddle factor, and \( k = 0, 1, ..., N/2 - 1 \). These operations form the butterfly structure in flow graphs.

By recursively halving and recombining, the FFT reduces computation to \( O(N \log N) \). For example:

- Naive DFT for \( N = 1024 \) → ≈ 1,048,576 operations

- FFT for \( N = 1024 \) → ≈ 10,240 operations

That’s over 100× faster — critical for real-time audio, image processing, and beyond 🚀

🔧 FFTs are not limited to powers of 2 — modern algorithms work with:

- Any composite length (mixed-radix)

- Prime sizes (prime factor algorithms)

- Real-valued optimizations

📦 Most FFT libraries (like FFTW, numpy.fft, etc.) can handle any \( N \) efficiently, though powers of 2 are usually fastest.

📏 Accuracy matters too: fewer operations = fewer rounding errors. In fact, FFTs are often more stable than naive DFTs for large \( N \).

✅ So: FFT is not only faster — it can be more accurate.

Pitfalls, Limitations, and Misconceptions

Despite its power, using the DFT/FFT isn’t magic – there are important considerations to keep in mind:

-

Sampling and Aliasing:

The DFT operates on sampled data, so if your sampling rate is not high enough to capture the signal’s highest frequency (see: Nyquist-Shannon sampling theorem), frequencies above half the sampling rate will “fold” into lower frequencies — a phenomenon known as aliasing. In practice, signals must be band-limited (e.g., with an analog filter) or sampled fast enough to avoid aliasing. -

Periodic Extension and Windowing:

The DFT inherently assumes the finite sequence you input is one period of a periodic signal. If your actual signal isn’t periodic in that window, the ends won’t align — causing spectral leakage (spreading energy into adjacent frequencies). Applying a window function (tapering to zero at the ends) reduces leakage, though at the cost of frequency resolution. -

Frequency Resolution:

The frequency bin spacing in a DFT is determined by the total duration of the signal. For example, 1 second of data yields 1 Hz resolution. To achieve finer resolution (e.g., 0.1 Hz), you need a longer signal (10 seconds) or apply zero-padding. Zero-padding smooths the spectrum but does not increase true resolution or reveal new frequency content — a common misconception. -

DFT Length and FFT Efficiency:

FFast Fourier Transformation algorithms work on data of any length, but they’re most efficient on lengths with small factors (like powers of 2, 3, 5, etc.). If possible, choose a length with such factors — or zero-pad your data. Remember, the FFT computes the DFT; adding more zeros only improves visualization, not signal detail. -

Interpretation of Results (Magnitude & Phase):

While the magnitude spectrum shows “how much” of each frequency exists in a signal, the phase spectrum shows “when” these components occur. Reconstructing a signal using only the magnitude and random phase will not yield the original. Complete analysis requires both magnitude and phase (or real and imaginary components). That said, phase is sometimes omitted in tasks like filtering or equalization. -

FFT vs DFT Misunderstandings:

The Fast Fourier Transformation is not a different transform; it is an efficient algorithm to compute the Discrete Fourier Transformation. It produces the same result (within numerical accuracy) as the direct DFT formula, but vastly faster. There’s no advantage to computing the DFT by definition. Also, while many assume FFTs only work on data sizes that are powers of 2, modern algorithms support arbitrary sizes efficiently. -

Boundary Effects and Filtering:

Using the DFT for convolution (like filtering via multiplication in the frequency domain) introduces wrap-around effects due to circular convolution. To perform correct linear convolution via FFT, you must use techniques like zero-padding, overlap-add, or overlap-save to avoid artifacts.

By keeping these points in mind, you can avoid common mistakes and make the most of Fourier analysis. The DFT and FFT are powerful tools — but they assume that the user understands the context of the signal and the mathematical implications of the transform. When used properly, they deliver highly accurate and insightful information about your data.

Conclusion

From a theoretical idea in the 19th century to an algorithm that underpins modern technology, the Fourier Transform has come a long way. We started with an intuitive notion: any signal can be seen as a combination of frequencies.

The Discrete Fourier Transform (DFT) is the mathematical tool that makes this insight concrete for digital data, and the Fast Fourier Transform (FFT) is the computational hero that makes it practical to use at scale. We’ve explored how the DFT works, how the FFT accelerates it, and how to interpret the results. We’ve also looked at real-world examples and applications — from music to images — and discussed common pitfalls to avoid.

Fourier analysis is everywhere in engineering and science — it’s truly one of those “superpowers” that, once learned, reveals itself in countless places. Whether you’re a college student beginning signal processing, an engineer designing a filter, or simply a tech enthusiast curious about how your MP3 player or digital camera works, understanding the DFT and FFT gives you a deeper appreciation of the frequency domain.

Fast Fourier Transformation

© Copyright 2025 - All Rights Reserved